
Data Protection & Compliance in the Middle East: GDPR, HIPAA & Data Residency for AI Support

As businesses in the Middle East accelerate digital transformation, artificial intelligence (AI) is becoming central to customer support, sales, and marketing operations. From AI chatbots answering customer queries to voice assistants handling appointments and AI copilots assisting agents, these systems process large volumes of personal and sensitive data. That makes data protection and regulatory compliance not just a legal checkbox but a business-critical capability.

This article is a practical, in-depth guide for CTOs, compliance leads, product teams, and CX owners working with AI-powered support systems in the Middle East. We’ll cover the regulatory landscape (GDPR, HIPAA, regional laws, and data residency expectations), the specific risks AI introduces, the technical and organizational controls you should implement, sector-specific requirements (healthcare, finance), and a step-by-step compliance roadmap. We’ll also add regional context for the UAE and MENA markets, and finish with why Eyaana is the best choice for enterprises seeking an AI-first sales and marketing solution that meets modern data protection requirements.

Why data protection matters for AI support

AI-powered support systems change the game in three ways:

-

Scale of data processing: AI chatbots and voice assistants generate, store, and analyze massive volumes of conversation logs, voice recordings, and metadata.

-

Sensitivity of data: Support conversations often include personally identifiable information (PII), financial details, medical queries, and other sensitive elements.

-

Opaque decision-making: Many AI models behave as black boxes; understanding why a model made a particular suggestion is often non-trivial—this matters for audit, accountability and fairness.

Failing to protect data or comply with regulations can lead to costly fines, reputational damage, customer churn, and in regulated industries (healthcare, finance) potential legal exposure. For companies in the UAE and the broader Middle East—where business ecosystems are increasingly global and customers are privacy-aware—embedding strong data protection into AI support is essential.

Snapshot: The regional regulatory environment (what you need to know)

The Middle East is not a single regulatory regime. However, several themes are common:

-

Global regulations matter locally. The EU General Data Protection Regulation (GDPR) can apply to a regional business that has an establishment in the EU, or that offers goods or services to, or monitors the behavior of, individuals in the EU. Many multinational organizations in the region therefore treat GDPR as a baseline.

-

Sector-specific rules matter. For healthcare data, HIPAA (for US-related patient data) or local healthcare privacy laws apply; financial services have their own compliance obligations.

-

National laws and data residency expectations are emerging rapidly. The UAE and several Gulf countries have enacted or updated data protection laws and guidance that emphasize data subject rights, security obligations, and sometimes data residency. Some free zones (e.g., DIFC) have their own data protection frameworks that mirror international standards.

-

Cross-border data transfer scrutiny. Authorities care about where data is hosted and whether it moves across borders without safeguards.

Quick regional context (business-focused)

-

Digital adoption in the UAE is extremely high: a large majority of consumers use online and mobile channels for services—this increases volumes of personal data processed by AI systems.

-

Regional enterprises are accelerating cloud and AI investments: a significant portion of businesses plan to expand AI use in customer-facing functions within the next 2–3 years.

-

Multilingual and multicultural customer bases in GCC countries make conversational AI and omnichannel support common sources of sensitive, context-rich data.

Note: The points above are qualitative observations of regional market trends, not measured statistics. Treat them as directional context for prioritizing compliance efforts.

Key regulations to understand

1. GDPR (EU General Data Protection Regulation)

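Although a European law, GDPR is globally influential. Beyond organizations established in the EU, Article 3(2) extends it to Middle Eastern companies with no EU establishment when:

-

They offer goods or services to individuals in the EU, or

-

They monitor the behavior of individuals in the EU.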

Key GDPR obligations relevant to AI support:

-

Lawful basis for processing (consent, legitimate interest, contract, etc.).

-

Data subject rights (access, rectification, erasure, portability, restriction, objection).

-

Data Protection Impact Assessments (DPIAs), mandatory under Article 35 where processing is likely to result in high risk; this often includes large-scale profiling or automated decision-making.

-

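Breach notification to the supervisory authority without undue delay and, where feasible, within 72 hours of becoming aware of the breach (Article 33), plus notice to affected individuals when the risk to them is high (Article 34).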

-

Adequate safeguards for international transfers (Standard Contractual Clauses, adequacy decisions).

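Implication for AI support: If your chatbot logs and profiles users in the EU, you need a documented lawful basis (typically consent for marketing and profiling), a DPIA, mechanisms to honor data subject rights, and an incident response process fast enough to meet the 72-hour window.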

2. HIPAA (Health Insurance Portability and Accountability Act)

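HIPAA is a U.S. law that safeguards protected health information (PHI) held by covered entities (health plans, health care clearinghouses, and health care providers that conduct standard electronic transactions) and by their business associates. It becomes relevant when:

-

You are a US covered entity, such as a provider offering telehealth through your platform.

-

You handle PHI on behalf of a covered entity, which makes you a business associate (e.g., a support platform processing a US provider's telehealth calls).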

Key HIPAA obligations:

-

Safeguards for PHI (administrative, physical, technical).

-

Business Associate Agreements (BAAs) with third-party service providers.

-

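Breach notification (affected individuals must be notified without unreasonable delay and no later than 60 calendar days after discovery) and strong logging/auditing.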

Implication for AI support: If your AI support handles health inquiries, appointment details, or identifies patients, you must apply HIPAA-caliber controls (encryption, access controls, BAAs) and restrict data flows appropriately.

3. Regional & national laws (examples)

-

UAE Personal Data Protection Law (PDPL, Federal Decree-Law No. 45 of 2021): emphasizes consent, data subject rights, and limits on processing. Financial free zones run their own regimes aligned with international best practices, notably the DIFC Data Protection Law (DIFC Law No. 5 of 2020) and the ADGM Data Protection Regulations 2021.

-

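Saudi PDPL & other GCC regulations: Saudi Arabia's Personal Data Protection Law has been in force since September 2023, and other GCC states, including Bahrain, Qatar, and Oman, have enacted privacy laws that reflect international norms alongside local cultural sensitivities.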

-

Sector-specific regulations: Central banks, health authorities, and telecom regulators may require specific controls for financial and health data.

Implication for AI support: Understand which national laws apply based on customer location, hosting location, and your corporate presence. If you operate from a free zone such as DIFC or ADGM, its own data protection law generally applies in place of the federal PDPL.

Data residency and localization: what "keeping data in-country" actually means

Data residency laws specify where data must be stored or processed. Requirements range from voluntary “preference” to strict legal mandates. Common varieties:

-

Mandatory residency: Certain data types (e.g., government records or health records) must be stored within national borders.

-

Conditional residency: Residency required unless adequate safeguards are in place for transfer (SCCs, binding corporate rules).

-

No residency requirement: Data may cross borders but transfers are regulated.

Design considerations for AI support:

-

Architect for locality: Choose cloud and hosting options that let you keep data in-country or within approved regions (e.g., UAE datacenters).

-

Segregate sensitive data: Store only metadata in global systems; keep sensitive PII/PHI in localized storage.

-

Hybrid architectures: Edge ingestion + regional processing + aggregated, anonymized insights centrally.

-

Contractual safeguards: Use contractual clauses, encryption, and controlled access if cross-border transfers are necessary.
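To make the locality and segregation ideas concrete, here is a minimal Python sketch of a storage router that picks a region from the customer's country and the class of data. The region names, the `RESIDENCY_RULES` table, and the data classes are hypothetical placeholders; the real mapping should come from your legal review and your cloud provider's region list.

```python
from dataclasses import dataclass
from enum import Enum


class DataClass(Enum):
    METADATA = "metadata"  # channel, timestamps, intent labels
    PII = "pii"            # names, phone numbers, email addresses
    PHI = "phi"            # health-related content


# Hypothetical policy: which data classes must stay in-country, per customer country.
# Region identifiers are placeholders, not real cloud region names.
RESIDENCY_RULES = {
    "AE": {"local_region": "uae-local", "must_stay_local": {DataClass.PII, DataClass.PHI}},
    "SA": {"local_region": "ksa-local", "must_stay_local": {DataClass.PII, DataClass.PHI}},
}
GLOBAL_REGION = "global-analytics"


@dataclass(frozen=True)
class StorageTarget:
    region: str
    reason: str


def route_record(customer_country: str, data_class: DataClass) -> StorageTarget:
    """Choose a storage region, keeping sensitive data classes in-country."""
    rule = RESIDENCY_RULES.get(customer_country)
    if rule is None:
        if data_class is DataClass.METADATA:
            return StorageTarget(GLOBAL_REGION, "metadata with no residency rule")
        # Fail closed: never export PII/PHI for a country with no reviewed rule.
        raise ValueError(f"no residency rule for {customer_country!r}")
    if data_class in rule["must_stay_local"]:
        return StorageTarget(rule["local_region"], "sensitive class kept in-country")
    return StorageTarget(GLOBAL_REGION, "non-sensitive class may be centralized")
```

Failing closed is a deliberate choice here: an unexpected country code raises an error for PII or PHI instead of quietly sending it to the global region.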

AI-specific privacy risks and mitigation

AI introduces new privacy risks beyond traditional processing:

Risk: Unintended inference & profiling

AI can infer sensitive attributes (health, political views) from seemingly innocuous data.

Mitigation:

-

Conduct a DPIA focused on profiling risk.

-

Limit retention of raw conversational data.

-

Apply strict access controls and role-based access to model outputs that infer sensitive attributes.
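As an illustration of limiting retention of raw conversational data, the sketch below removes full transcripts after a fixed window while keeping a shorter summary. The 30-day window, the `Transcript` fields, and the in-memory list are assumptions for the example; in production this would be a scheduled job against your actual store, using timezone-aware timestamps.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class Transcript:
    conversation_id: str
    created_at: datetime
    raw_text: Optional[str]  # full conversation; removed once the window passes
    summary: str             # short, PII-reviewed summary kept for longer


# Hypothetical window: take the real value from your documented retention policy.
RAW_RETENTION = timedelta(days=30)


def purge_raw_transcripts(records: list, now: datetime) -> int:
    """Delete raw text older than RAW_RETENTION, keep summaries; return the purge count."""
    purged = 0
    for record in records:
        if record.raw_text is not None and now - record.created_at > RAW_RETENTION:
            record.raw_text = None
            purged += 1
    return purged
```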

Risk: Model leakage and data memorization

Large models can memorize and inadvertently reproduce training data.

Mitigation:

-

Avoid training on raw PII without anonymization/pseudonymization.

-

Use differential privacy techniques for model training where feasible.

-

Monitor model outputs for data leakage during testing.
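One common way to test for memorization is to plant unique random "canary" strings in a copy of the training data, then prompt the fine-tuned model during testing and check whether any canary reappears in its outputs. The sketch below covers only the generation and scanning steps; the `CANARY-` prefix and the function names are hypothetical, and a clean scan reduces, but does not rule out, the risk of leakage.

```python
import secrets


def make_canaries(n: int) -> list:
    """Create unique random marker strings to plant in a copy of the training set."""
    return [f"CANARY-{secrets.token_hex(8)}" for _ in range(n)]


def find_leaks(model_outputs: list, canaries: list) -> list:
    """Return (output index, canary) pairs where a planted canary was reproduced."""
    leaks = []
    for i, text in enumerate(model_outputs):
        for canary in canaries:
            if canary in text:
                leaks.append((i, canary))
    return leaks
```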

Risk: Lack of explainability

Black-box AI can make decisions that are hard to explain to regulators or customers.

Mitigation:

-

Preserve logs of inputs, model versions, and outputs for audit.

-

Implement model interpretability tools and produce human-readable explanation templates.

-

Where automated decision-making impacts a user materially, provide mechanisms for human review and contestation (GDPR Article 22 considerations).
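A minimal sketch of an audit trail for inputs, model versions, and outputs, assuming an in-memory list stands in for durable, access-controlled storage. Each entry stores the hash of the previous one, so editing an earlier record breaks verification. In practice you would pseudonymize or redact the prompt and output before logging them; they are stored raw here only to keep the example short, and the class name is hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone


class InferenceAuditLog:
    """Append-only, hash-chained log of model inferences (in-memory sketch)."""

    def __init__(self) -> None:
        self.entries: list = []

    def record(self, conversation_id: str, model_version: str, prompt: str, output: str) -> dict:
        prev_hash = self.entries[-1]["entry_hash"] if self.entries else "0" * 64
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "conversation_id": conversation_id,
            "model_version": model_version,
            "prompt": prompt,
            "output": output,
            "prev_hash": prev_hash,
        }
        # Hash the canonical JSON form so any later edit changes the digest.
        entry["entry_hash"] = hashlib.sha256(json.dumps(entry, sort_keys=True).encode("utf-8")).hexdigest()
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash and check each entry points at its predecessor."""
        prev_hash = "0" * 64
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "entry_hash"}
            if body["prev_hash"] != prev_hash:
                return False
            expected = hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()
            if expected != entry["entry_hash"]:
                return False
            prev_hash = entry["entry_hash"]
        return True
```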

Risk: Consent management

Bots may collect data in conversational flows where consent must be explicit, especially for marketing or profiling.

Mitigation:

-

Implement clear, localized consent prompts (including Arabic/English options).

-

Record consent events with time, channel, and purpose.

-

Provide accessible mechanisms for withdrawal of consent.
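The consent points above map naturally onto an append-only ledger in which every grant or withdrawal is an event carrying time, channel, purpose, and the language the notice was shown in. This is a hypothetical in-memory sketch; the class and field names are illustrative, and a real system would persist events durably.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass(frozen=True)
class ConsentEvent:
    user_id: str
    purpose: str    # e.g. "marketing", "profiling", "health_data_storage"
    granted: bool   # False records a withdrawal
    channel: str    # e.g. "whatsapp", "web_chat", "voice"
    language: str   # language of the notice shown, e.g. "ar" or "en"
    timestamp: datetime


class ConsentLedger:
    """Append-only consent history; the latest event per (user, purpose) wins."""

    def __init__(self) -> None:
        self._events: list = []

    def record(self, event: ConsentEvent) -> None:
        self._events.append(event)

    def has_consent(self, user_id: str, purpose: str) -> bool:
        latest: Optional[ConsentEvent] = None
        for event in self._events:
            if event.user_id == user_id and event.purpose == purpose:
                if latest is None or event.timestamp >= latest.timestamp:
                    latest = event
        # No recorded event means no consent.
        return latest is not None and latest.granted
```

Keeping withdrawals as events rather than deleting the original grant preserves the history you may need to demonstrate what the user agreed to, and when.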

Technical controls: engineering for privacy and compliance

To operate AI support with strong data protection, implement layered technical controls:

1. Data minimization

-

Collect only the data needed for a specific purpose.

-

Avoid long-term retention of raw transcripts when summaries will do.
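One simple way to enforce "collect only what the purpose needs" is a per-purpose field allowlist applied before anything is stored. The purposes and field names below are hypothetical examples.

```python
# Hypothetical per-purpose allowlists: only these fields may be persisted.
ALLOWED_FIELDS = {
    "appointment_booking": {"customer_id", "preferred_date", "branch", "language"},
    "order_status": {"customer_id", "order_id", "language"},
}


def minimize(payload: dict, purpose: str) -> dict:
    """Keep only the fields allowed for the stated purpose; drop everything else."""
    allowed = ALLOWED_FIELDS.get(purpose)
    if allowed is None:
        # An undeclared purpose is a policy gap, so refuse rather than store everything.
        raise KeyError(f"no data-minimization policy for purpose {purpose!r}")
    return {key: value for key, value in payload.items() if key in allowed}
```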

2. Encryption (at rest and in transit)

-

Use TLS 1.2 or later (preferably TLS 1.3) for data in transit.

-

Use AES-256 or equivalent for storage encryption.

-

Manage keys securely (HSMs or cloud KMS with strict IAM).
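For the transit side, a client TLS configuration in Python's standard `ssl` module might look like the sketch below. Python's standard library does not include AES, so storage encryption is usually delegated to a vetted cryptography library or a cloud KMS and is not shown here; the function name is hypothetical.

```python
import ssl


def make_client_tls_context() -> ssl.SSLContext:
    """Client-side TLS settings for calls to chat, voice, or model APIs."""
    # create_default_context() enables certificate verification and hostname checks.
    context = ssl.create_default_context(ssl.Purpose.SERVER_AUTH)
    # Refuse anything older than TLS 1.2; TLS 1.3 is negotiated when both sides support it.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context
```

The resulting context can be passed to standard clients, for example `urllib.request.urlopen(url, context=make_client_tls_context())`.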

3. Access control & IAM

-

Principle of least privilege across services and human users.

-

Use multi-factor authentication for admin and privileged roles.

-

Role-based access with clear separation (development vs production vs analytics).
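The three access principles above can be combined in a single authorization check: a role must carry the permission, the request must come from the matching environment, and privileged actions require completed MFA. The roles, permissions, and environment names are hypothetical; in production this logic normally lives in your identity provider or policy engine.

```python
from dataclasses import dataclass

# Hypothetical role -> permission mapping following least privilege.
ROLE_PERMISSIONS = {
    "agent": {"read_conversation"},
    "analyst": {"read_pseudonymized_analytics"},
    "admin": {"read_conversation", "export_data", "manage_users"},
}
PRIVILEGED_PERMISSIONS = {"export_data", "manage_users"}


@dataclass(frozen=True)
class Principal:
    user_id: str
    roles: frozenset
    mfa_verified: bool
    environment: str  # "production", "development", or "analytics"


def is_allowed(principal: Principal, permission: str, environment: str) -> bool:
    """Grant only if a role carries the permission, the environment matches,
    and MFA has been completed for privileged actions."""
    if principal.environment != environment:
        return False  # keep dev, production, and analytics access separate
    granted = any(permission in ROLE_PERMISSIONS.get(role, set()) for role in principal.roles)
    if not granted:
        return False
    if permission in PRIVILEGED_PERMISSIONS and not principal.mfa_verified:
        return False
    return True
```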

4. Pseudonymization & anonymization

-

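Replace identifiers with tokens when analyzing data centrally. Pseudonymized data is still personal data under GDPR, because whoever holds the key or mapping can re-identify it.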

-

Use irreversible anonymization for data used in analytics, unless re-identification is necessary for operations.
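Tokenization is often done with a keyed hash such as HMAC-SHA256, which gives the same token for the same input so analysts can join records without seeing raw values. This is a minimal sketch; the `tok_` prefix, the normalization rule, and the idea of holding the key in a KMS are assumptions. Because anyone with the key can re-derive tokens, this is pseudonymization, not anonymization.

```python
import hashlib
import hmac


def pseudonymize(identifier: str, key: bytes) -> str:
    """Map an identifier (phone, email, national ID) to a stable, keyed token."""
    # Normalize first so trivially different spellings map to the same token.
    normalized = identifier.strip().lower()
    digest = hmac.new(key, normalized.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"tok_{digest[:32]}"
```

Rotating or destroying the key is what separates this from anonymization: once the key is gone, the tokens can no longer be linked back to new inputs.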

5. Logging, monitoring & audit trails

-

Log access to PII and model inference outputs.

-

Retain audit logs for regulatory retention periods.

-

Implement SIEM and anomaly detection for suspicious access.
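As a simple illustration of anomaly detection on PII access, the sketch below flags users whose access count today exceeds their own recent baseline (mean plus three standard deviations), with an absolute floor to limit noisy alerts. The thresholds are hypothetical, and a rule like this would feed a SIEM rather than replace one.

```python
import statistics


def flag_unusual_access(history: dict, today: dict, k: float = 3.0, min_floor: int = 20) -> list:
    """Flag users whose PII-record accesses today exceed mean + k * stdev
    of their own recent daily counts, never below min_floor."""
    flagged = []
    for user, count in today.items():
        past = history.get(user, [])
        if len(past) < 2:
            # Not enough baseline to estimate spread: use the absolute floor.
            threshold = min_floor
        else:
            threshold = max(min_floor, statistics.mean(past) + k * statistics.stdev(past))
        if count > threshold:
            flagged.append(user)
    return sorted(flagged)
```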

6. Secure model training pipelines

-

Version datasets and models.

-

Use synthetic or anonymized datasets where possible.

-

Maintain strict controls on who can ingest new training data.
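Dataset versioning can start with a content fingerprint recorded next to each model version, so auditors can check exactly which data a model was trained on. This sketch hashes each record as canonical JSON and sorts the hashes so row order does not matter; the manifest fields are hypothetical.

```python
import hashlib
import json


def dataset_fingerprint(records: list) -> str:
    """Deterministic SHA-256 fingerprint of a dataset, independent of row order."""
    record_hashes = sorted(
        hashlib.sha256(json.dumps(r, sort_keys=True, ensure_ascii=False).encode("utf-8")).hexdigest()
        for r in records
    )
    return hashlib.sha256("\n".join(record_hashes).encode("utf-8")).hexdigest()


def training_manifest(dataset_name: str, records: list, approved_by: str, model_version: str) -> dict:
    """Provenance entry linking a model version to the exact data it was trained on."""
    return {
        "dataset": dataset_name,
        "fingerprint": dataset_fingerprint(records),
        "record_count": len(records),
        "approved_by": approved_by,
        "model_version": model_version,
    }
```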

7. Endpoint security & hardening

-

Protect agent desktops and mobile apps that access conversation data.

-

Enforce device management and encryption policies for remote agents.

Organizational controls & governance

Technology alone isn’t enough—put organizational processes in place:

1. Appoint a Data Protection Officer (DPO) or equivalent

-

Responsible for DPIAs, regulatory reporting, and serving as contact point for data subjects.

2. Data protection by design & default

-

Integrate privacy reviews in project lifecycles (PIAs for new features).

-

Require privacy sign-off before rolling out new AI model updates.

3. Policies & playbooks

-

Clear data classification, retention, and disposal policies.

-

Incident response plan tailored for data breaches and model-related incidents.

4. Vendor risk management

-

Conduct security and privacy assessments of cloud providers, model vendors, and third-party integrators.

-

Require SOC 2 reports or ISO/IEC 27001 certificates, plus contractual privacy commitments (BAAs where HIPAA applies).

5. Training & awareness

-

Regular training for agents, product teams, and developers on handling PII in conversations.

-

Phishing & social engineering awareness, especially where voice and social channels are used.

Data breach readiness & incident response

Every organization must be prepared for the possibility of a breach. A practical incident response approach includes:

-

Detection & triage: Automated alerts for anomalous access; immediate containment steps.

-

Forensics: Preserve logs, snapshot systems; identify scope (data types, customer locations).

-

Notification: Trigger regional notification obligations (e.g., notify the data protection authority and affected data subjects where required). Timelines are strict: GDPR expects notice to the supervisory authority within 72 hours where feasible, and other regimes set their own deadlines.

-

Remediation: Patch vulnerabilities, rotate credentials, re-train models if the leak was model-related.

-

Learning: Conduct a post-incident review and update controls.

Keep template notices prepared for different jurisdictions and languages—Arabic versions are essential for UAE-based customers.
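Because different regimes run on different clocks, it helps to compute each notification deadline as soon as a breach is discovered. This sketch fills in only two windows stated in the regulations themselves: GDPR Article 33's 72 hours to the supervisory authority (where feasible) and HIPAA's outer limit of 60 calendar days for notifying individuals. The regime keys are hypothetical, and any other deadline, including the UAE PDPL's, is deliberately left as `None` for your legal team to confirm.

```python
from datetime import datetime, timedelta, timezone

# Only windows stated in the regulations themselves are filled in;
# everything else must be confirmed by counsel (left as None on purpose).
NOTIFICATION_WINDOWS = {
    "GDPR_supervisory_authority": timedelta(hours=72),  # Art. 33, "where feasible"
    "HIPAA_individuals": timedelta(days=60),            # outer limit after discovery
    "UAE_PDPL": None,                                    # confirm with counsel
}


def notification_deadlines(aware_at: datetime, regimes: list) -> dict:
    """Latest notification time per applicable regime (None if not configured)."""
    deadlines = {}
    for regime in regimes:
        window = NOTIFICATION_WINDOWS.get(regime)
        deadlines[regime] = aware_at + window if window is not None else None
    return deadlines
```

These are outer limits: both regimes also expect notification without undue or unreasonable delay, so the computed time is a ceiling, not a target.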

Sector-specific considerations

Healthcare (HIPAA and equivalents)

-

Treat all patient conversations with extra caution.

-

Sign BAAs with vendors that process PHI.

-

Use encryption, strict key management and limit access to PHI via role separation.

-

Mandatory logging & retention rules typically apply.

Financial services

-

KYC and AML data require high protection and specific retention/monitoring regimes.

-

Strong identity verification, secure transmission of documents, and audit trails are critical.

Public sector & government

-

Some government interactions may require data localization and stringent clearance processes.

Practical implementation roadmap (step-by-step)

This roadmap helps teams move from awareness to operational compliance.

Phase 1 — Discovery & risk assessment

-

Map data flows: channels, storage, third parties, model training pipelines.

-

Identify applicable regulations (GDPR, PDPL, HIPAA, sector rules).

-

Conduct initial DPIA for AI support systems.

Phase 2 — Design & mitigate

-

Define data minimization and retention policies.

-

Choose regional hosting options if data residency is required.

-

Design consent flows for chat and voice channels (multilingual).

-

Implement encryption, IAM, and pseudonymization.

Phase 3 — Validate & pilot

-

Run privacy & security tests (pen testing, red teaming).

-

Pilot with a subset of users and measure performance, false positives in PII detection, and retention compliance.

-

Validate DPIA findings and update mitigation strategies.

Phase 4 — Launch & monitor

-

Go live with monitoring on logs, access patterns, and model outputs.

-

Establish SLAs for incident response and regulatory notifications.

Phase 5 — Continuous improvement

-

Periodic audits, refresher training, and model re-evaluation.

-

Use metrics (incidence of fallbacks due to privacy issues, access anomalies, time-to-breach-containment) to measure health.

Cross-border transfers: practical options and safeguards

When data must cross borders, apply layered safeguards:

-

Adequacy: Rely on adequacy decisions where available (e.g., EU adequacy rulings).

-

Standard Contractual Clauses (SCCs): Use the current EU SCCs, adopted by the European Commission in 2021, for contractual protections.

-

Binding Corporate Rules (BCRs): For multinational groups, BCRs offer internal transfer compliance.

-

Encryption & split-processing: Encrypt data client-side and process anonymized data centrally.

-

Local proxies: Implement a local data proxy layer that keeps raw data local and sends only aggregated/anonymized signals overseas.
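A local proxy of this kind can be as simple as an aggregation step that runs in-country and exports only counts. The sketch below counts conversations per (intent, language) and suppresses any group smaller than a minimum size, a simple threshold rule in the spirit of k-anonymity, so small cohorts cannot be singled out overseas. The threshold of 10 and the field names are hypothetical.

```python
from collections import Counter

MIN_GROUP_SIZE = 10  # hypothetical minimum group size before a count may leave the country


def aggregate_for_export(conversations: list, k: int = MIN_GROUP_SIZE) -> dict:
    """Runs inside the in-country proxy: raw text never leaves, only
    counts per (intent, language), with groups smaller than k suppressed."""
    counts = Counter((c["intent"], c["language"]) for c in conversations)
    return {f"{intent}|{lang}": n for (intent, lang), n in counts.items() if n >= k}
```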

Privacy-preserving ML practices

To train or fine-tune AI responsibly:

-

Use synthetic or anonymized datasets for model development.

-

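Differential privacy adds calibrated noise to query results or training updates, bounding how much any single person's record can influence the output and reducing re-identification risk.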

-

Federated learning lets models learn across locations without centralizing raw data.

-

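Output filters that scan generated responses for PII before they reach users; watermarking can additionally help trace where model outputs came from, but does not by itself prevent leakage.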
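To show the core idea behind differential privacy, here is the Laplace mechanism applied to an analytics count. Training-time differential privacy usually relies on DP-SGD via libraries such as Opacus or TensorFlow Privacy; this sketch covers only the simpler query case, and the function names are illustrative. A count changes by at most 1 when one person's record is added or removed, so noise is drawn with scale 1/epsilon.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Laplace(0, scale) sample: the difference of two i.i.d. exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def dp_count(true_count: int, epsilon: float, rng: random.Random) -> float:
    """Release a count with epsilon-differential privacy (Laplace mechanism).

    Sensitivity of a counting query is 1, so the noise scale is 1 / epsilon.
    Smaller epsilon means more noise and stronger privacy.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    sensitivity = 1.0
    return true_count + laplace_noise(sensitivity / epsilon, rng)
```

For example, `dp_count(120, 0.5, random.Random())` returns 120 plus noise with an average magnitude of 2; each released query spends privacy budget, so repeated releases of the same count weaken the guarantee.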

Contracts & vendor management checklist

Before engaging an AI or cloud vendor, ensure your contract includes:

-

Data processing agreement with specified roles & responsibilities.

-

Clear data residency and deletion commitments.

-

Security attestations and certifications (SOC 2 reports, ISO/IEC 27001).

-

Right to audit and receive transparency reports.

-

BAA for HIPAA-relevant processing.

-

Clauses on subprocessor management and notification.

Auditing, metrics and KPIs for compliance

Track these operational metrics to demonstrate compliance maturity:

-

Number of DPIAs completed and remediations implemented.

-

Time to fulfill data subject requests (access, deletion).

-

Percent of conversation data anonymized before analysis.

-

Mean time to detect and respond to incidents.

-

Percentage of vendors with up-to-date certifications.

Regular compliance audits (internal and third-party) validate controls and prepare the organization for regulatory scrutiny.
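Several of the KPIs listed above reduce to simple arithmetic over records you likely already keep. This sketch computes the median time to fulfill data subject requests, the share of conversations anonymized before analysis, and the share of vendors with current certifications; the input shapes are assumptions about how those records are stored.

```python
from datetime import datetime
from statistics import median


def dsar_median_days(requests: list) -> float:
    """Median days from receiving a data subject request to fulfilling it.
    Each item is a (received_at, fulfilled_at) pair of datetimes."""
    durations = [(done - received).total_seconds() / 86400 for received, done in requests]
    return median(durations)


def percent_anonymized(total_records: int, anonymized_before_analysis: int) -> float:
    """Share of conversation records anonymized before they reached analytics."""
    if total_records == 0:
        return 0.0
    return 100.0 * anonymized_before_analysis / total_records


def percent_vendors_current(cert_expiry: dict, as_of: datetime) -> float:
    """Share of vendors whose certification or attestation is still valid on as_of."""
    if not cert_expiry:
        return 0.0
    current = sum(1 for expires in cert_expiry.values() if expires > as_of)
    return 100.0 * current / len(cert_expiry)
```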

Cultural & regional practices: localization matters

-

Language & consent: Consent and privacy notices must be available in Arabic and other major local languages.

-

Trust & transparency: Customers in the region value clear explanations about how AI processes their data—transparent prompts and fallback to human agents build trust.

-

Local certification & engagement: Engaging with local standards bodies and aligning with national cybersecurity frameworks strengthens market legitimacy.

Real-world scenarios & playbooks

Scenario A: Handling medical inquiries through chat

Playbook:

-

Detect PHI via NLU and mark conversation as sensitive.

-

Prompt user for explicit consent to store health data; present Arabic consent option.

-

Route PHI to a dedicated HIPAA-compliant storage and restrict access to authorized clinicians.

-

If third-party analytics are used, ensure BAAs and pseudonymization.
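The first steps of this playbook can be sketched as a screening function that marks a message as sensitive, chooses a restricted store, and selects the consent prompt in the user's language. The keyword patterns are a hypothetical stand-in for the NLU classifier the playbook describes and are far weaker than a trained model; the store names and prompt wording are also illustrative.

```python
import re

# Hypothetical keyword patterns standing in for a trained NLU/PHI classifier.
PHI_PATTERNS = [
    re.compile(r"\b(diagnos\w*|prescription|symptom\w*|allerg\w*|blood test)\b", re.IGNORECASE),
    re.compile(r"(موعد طبي|وصفة طبية|أعراض)"),  # Arabic: medical appointment, prescription, symptoms
]

# Illustrative consent wording in English and Arabic.
CONSENT_PROMPTS = {
    "en": "May we store the health information you shared to handle your request?",
    "ar": "هل توافق على حفظ المعلومات الصحية التي شاركتها لمعالجة طلبك؟",
}


def screen_message(text: str, locale: str) -> dict:
    """Mark a message as sensitive, pick its store, and choose the consent prompt."""
    sensitive = any(p.search(text) for p in PHI_PATTERNS)
    return {
        "sensitive": sensitive,
        "storage": "phi_restricted_store" if sensitive else "standard_store",
        "consent_prompt": CONSENT_PROMPTS.get(locale, CONSENT_PROMPTS["en"]) if sensitive else None,
    }
```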

Scenario B: Outbound marketing calls with personalized recommendations

Playbook:

-

Obtain explicit consent for marketing during onboarding.

-

Maintain do-not-call and channel preferences across systems.

-

Keep PII used for personalization isolated in residency-compliant storage.

-

Track opt-outs promptly and verify suppression lists before campaigns.
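Verifying suppression lists mostly comes down to comparing normalized phone numbers, since the same number often appears in different formats across systems. In this sketch the normalization rules are deliberately simplified, and defaulting to the UAE country code 971 is an assumption; a production system would use a dedicated phone-number library.

```python
import re


def normalize_msisdn(number: str, default_country_code: str = "971") -> str:
    """Reduce a phone number to digits with a country code (simplified rules)."""
    digits = re.sub(r"\D", "", number)
    if digits.startswith("00"):
        digits = digits[2:]                          # international prefix 00
    elif digits.startswith("0"):
        digits = default_country_code + digits[1:]   # local trunk prefix 0
    return digits


def filter_campaign(recipients: list, do_not_call: set, consented: set) -> list:
    """Keep numbers that have marketing consent and are not on the suppression list."""
    blocked = {normalize_msisdn(n) for n in do_not_call}
    allowed = {normalize_msisdn(n) for n in consented}
    result, seen = [], set()
    for number in recipients:
        key = normalize_msisdn(number)
        if key in allowed and key not in blocked and key not in seen:
            result.append(key)
            seen.add(key)
    return result
```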

Scenario C: Training models on historical support logs

Playbook:

-

Identify PII and apply automated redaction or pseudonymization.

-

Use approved datasets only and limit access to training environments.

-

Maintain model training logs and dataset provenance for audits.
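Automated redaction is often a first pass with regular expressions before more thorough checks. The patterns below are hypothetical and imperfect: the Emirates ID rule assumes the 784-prefixed 15-digit format, and the phone rule will also catch some dates or order numbers. That is why real pipelines usually combine rules like these with NER models and human spot checks. The per-label counts can be written to the training log as evidence that redaction ran.

```python
import re

# Order matters: the Emirates ID rule runs before the broader phone rule.
REDACTION_PATTERNS = [
    ("EMAIL", re.compile(r"[\w.+-]+@[\w-]+(\.[\w-]+)+")),
    ("EMIRATES_ID", re.compile(r"\b784-?\d{4}-?\d{7}-?\d\b")),
    ("PHONE", re.compile(r"\+?\d[\d\s-]{7,}\d")),
]


def redact(text: str) -> tuple:
    """Replace matches with [LABEL] placeholders; return (redacted text, counts per label)."""
    counts = {}
    for label, pattern in REDACTION_PATTERNS:
        text, n = pattern.subn(f"[{label}]", text)
        counts[label] = n
    return text, counts
```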

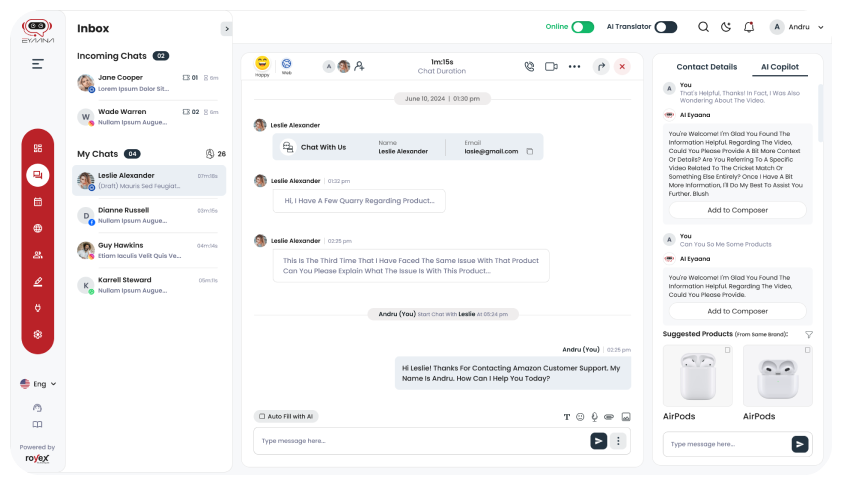

Why Eyaana is the best choice for AI-powered customer support, sales, and marketing

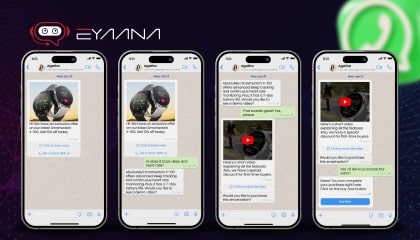

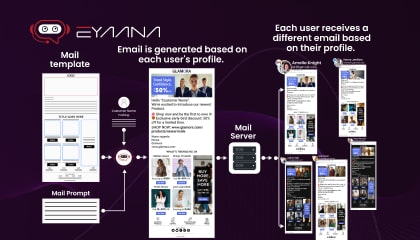

When you’re building or migrating AI-driven support in the Middle East, you need a partner that understands both AI capabilities and regulatory expectations. Eyaana was designed with those realities in mind—and here’s why it stands out:

1. Built as an AI-first sales and marketing solution

Eyaana is architected for conversational AI from the ground up, not retrofitted. That means privacy and data protection were considered as core product requirements, not afterthoughts.

2. Regional readiness & multilingual support

Eyaana supports Arabic-first experiences alongside English and other regional languages. It’s designed to capture consent, present privacy notices, and operate in culturally appropriate ways.

3. Data residency & deployment flexibility

Eyaana offers deployment options aligned with regional expectations—local datacenter options, hybrid architectures, and strict controls for sensitive data—helping companies meet residency or latency needs without sacrificing AI capability.

4. Enterprise-grade security & compliance posture

Eyaana aligns with industry-standard security certifications and provides contractual safeguards (e.g., BAAs, SCCs) for customers with GDPR/HIPAA obligations. Audit logs, encryption, and fine-grained IAM are built in.

5. Privacy-preserving ML & operational controls

Eyaana’s ML pipelines are designed to train on anonymized or synthetic data where possible. It supports model versioning, explainability features, and logging to make audits practical.

6. Hybrid AI + human model & transparent handoffs

Eyaana enables smooth escalation to human agents with full context and minimal data exposure, ensuring customers can exercise rights easily and agents have only the data they need.

7. Strong vendor governance & integrations

Eyaana’s ecosystem is built with vetted third-party integrations and clear subprocessor lists, simplifying vendor risk management for customers.

In short, for organizations in the UAE and MENA that need a practical, compliant, and powerful platform, Eyaana is uniquely positioned as an AI-first sales and marketing solution that balances innovation with privacy and regulatory rigor.

Conclusion & next steps

AI-powered customer support unlocks scale, speed and personalization—but it also raises new privacy and compliance responsibilities. For organizations operating in the UAE and the broader Middle East, this means treating data protection as a strategic capability: architecting systems for data minimization and residency, applying privacy-preserving ML practices, preparing for cross-border transfer constraints, and operationalizing incident response.

Start with a pragmatic roadmap: map your data, run DPIAs, adopt technical safeguards (encryption, IAM, pseudonymization), contractually secure your vendor ecosystem, and train your people. Measure compliance through KPIs and audits, and ensure that customers always have transparency and control over how their data is used.

When you’re ready to implement an AI support platform that balances advanced capabilities with regulatory maturity, Eyaana offers a complete, regionally aware solution, purpose-built as an AI-first sales and marketing solution, that helps you deliver intelligent support while meeting the highest standards of data protection.
