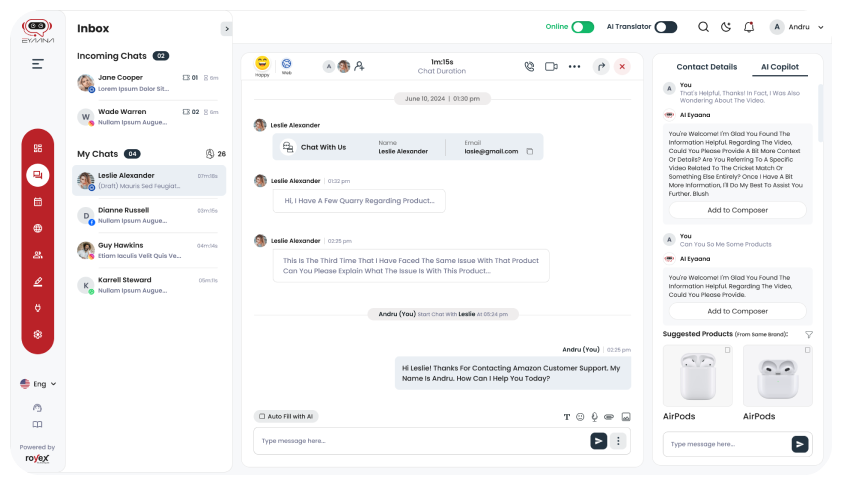

What Data Privacy Measures Do AI Chatbot Companies Follow?

In today’s digital world, data privacy is a major concern for both businesses and customers. With AI chatbots becoming an essential tool for customer service, people are increasingly asking: What happens to my personal data when I interact with an AI chatbot? Are my details safe? These are important questions, especially when chatbots collect, process, and respond to customer queries that may involve sensitive information.

In this article, we’ll explain the data privacy measures followed by AI chatbot companies to ensure that customer data is protected and handled responsibly.

What is Data Privacy in the Context of AI Chatbots?

![What is data privacy in the context of AI chatbots](/media/dllfpde2/what-is-data-privacy-in-the-context-of-ai-chatbots-min-1.jpg)

Before diving into how AI chatbot apps protect your data, let’s first understand what data privacy means. Data privacy refers to the protection of personal information that companies collect from customers or users. This can include anything from your name, contact details, and payment information to more sensitive data like medical history or financial details.

When you interact with an AI chatbot, it might collect and store some of this information to improve your experience or to help with processing your requests. However, for customers to feel comfortable using chatbots, businesses need to make sure this data is kept safe, used responsibly, and only shared when absolutely necessary.

AI chatbots can process large amounts of data very quickly. Whether it’s answering questions, handling customer service issues, or processing payments, chatbots work by analyzing and responding to customer inputs. With this type of interaction, there’s always the potential for personal data to be involved.

If not managed properly, this data could be misused, exposed to third parties, or fall into the wrong hands. This is why responsible AI chatbot companies put strict data privacy policies in place to protect customer information and maintain trust.

Key Data Privacy Measures AI Chatbot Companies Follow

![Key data privacy measures AI chatbot companies follow](/media/swgpm3a3/key-data-privacy-measures-ai-chatbot-companies-follow-min-1.jpg)

AI chatbot companies implement several key measures to ensure that customer data is protected. Let’s take a look at some of the most common and important privacy practices they follow.

1. Data Encryption

One of the most fundamental data privacy measures is encryption. When you interact with a chatbot, the information you send (like your name, address, or payment details) is encrypted, meaning it’s transformed into unreadable ciphertext that only systems holding the correct key can decrypt.

Encryption ensures that even if someone intercepts the data while it’s being transmitted (for example, over the internet), they won’t be able to read it. This is particularly important when dealing with sensitive information, such as credit card numbers or login credentials.

Example: When you share your email address with a chatbot, it is sent over an encrypted HTTPS (TLS) connection, and the chatbot’s system can encrypt it again before storing it, to prevent unauthorized access.
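To make encryption in transit concrete, here is a minimal Python sketch of a client that refuses to send a chat message over anything but HTTPS and uses the standard library’s `ssl` module to require certificate verification and TLS 1.2 or newer. The `send_message` helper and any endpoint you pass it are hypothetical; a real chatbot widget running in a browser would normally rely on the browser’s own HTTPS support instead.

```python
import json
import ssl
import urllib.request


def make_tls_context() -> ssl.SSLContext:
    """Client-side TLS settings: verify the server certificate and hostname, TLS 1.2+."""
    context = ssl.create_default_context()  # certificate + hostname checks are on by default
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


def send_message(url: str, text: str) -> bytes:
    """POST a chat message as JSON, refusing any URL that is not HTTPS."""
    if not url.startswith("https://"):
        raise ValueError("refusing to send chat data over an unencrypted connection")
    body = json.dumps({"message": text}).encode("utf-8")
    request = urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=10, context=make_tls_context()) as response:
        return response.read()
```

`ssl.create_default_context()` already turns on certificate and hostname checks; the sketch only adds a minimum protocol version and an explicit `https://` check so chat data never leaves the client unencrypted.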

2. Data Minimization

Data minimization is another important principle that AI chatbot companies follow. It means collecting only the personal data needed to perform the chatbot’s functions. For example, a chatbot doesn’t need your address if you’re simply asking for product details.

By collecting only what’s absolutely necessary, companies reduce the risk of exposing sensitive information. They also limit the amount of data they store, making it easier to manage and protect.

Example: If you’re interacting with a chatbot to ask about store hours, the chatbot may only collect your inquiry without asking for any personal information.
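A minimal sketch of data minimization, assuming a hypothetical chatbot that tags each request with an intent: each intent lists the only fields it needs, and everything else is dropped before the request is logged or stored. The intent names and field lists are illustrative, not taken from any particular product.

```python
from typing import Any, Dict

# Hypothetical mapping from chatbot intent to the only fields that intent needs.
REQUIRED_FIELDS = {
    "store_hours": {"query"},
    "order_status": {"query", "order_id"},
    "delivery_update": {"query", "order_id", "postcode"},
}


def minimize(intent: str, payload: Dict[str, Any]) -> Dict[str, Any]:
    """Keep only the fields the given intent needs; drop everything else."""
    # Unknown intents fall back to the query text alone, never the full payload.
    allowed = REQUIRED_FIELDS.get(intent, {"query"})
    return {key: value for key, value in payload.items() if key in allowed}
```

Because unknown intents keep only the query text, a new chatbot feature has to declare the extra fields it needs rather than receiving everything by default.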

3. User Consent

In many countries, data protection laws require companies to obtain user consent before collecting certain kinds of personal data. AI chatbot companies must inform users about what data is being collected and how it will be used. Where consent is required, they need to obtain it through a clear action, which usually means that users must agree to the company’s privacy policy before using the chatbot.

This is often done by showing a pop-up or notification asking users for consent when they first start interacting with the chatbot.

Example: When you first use a chatbot on a website, you might see a message asking you to accept the privacy policy (for example, by clicking “I agree”), which explains how your data will be handled.
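One way to enforce consent in code is to record which privacy policy version the user accepted and to store messages only when that matches the current policy. The `ChatSession` class, the `store_message` function, and the version strings are hypothetical; this is a sketch of the idea, not a legal compliance mechanism.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List, Optional


@dataclass
class ChatSession:
    """Hypothetical per-visitor chat session that remembers what the user agreed to."""
    session_id: str
    consent_version: Optional[str] = None  # privacy policy version the user accepted
    consent_at: Optional[datetime] = None  # when they accepted it (UTC)
    transcript: List[str] = field(default_factory=list)

    def record_consent(self, policy_version: str) -> None:
        """Call this only after an explicit action, such as clicking "I agree"."""
        self.consent_version = policy_version
        self.consent_at = datetime.now(timezone.utc)

    def has_consent(self, current_policy: str) -> bool:
        """Consent counts only for the policy version the user actually saw."""
        return self.consent_version == current_policy


def store_message(session: ChatSession, message: str, current_policy: str) -> bool:
    """Append the message to the stored transcript only if consent is in place.

    Returns True if the message was stored, False if it was not.
    """
    if not session.has_consent(current_policy):
        return False
    session.transcript.append(message)
    return True
```

Tying consent to a policy version means that when the policy changes, the chatbot stops storing messages until the user accepts the new version.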

4. Anonymizing Data

Anonymization is the process of removing any personally identifiable information (PII) from the data that the chatbot collects. AI chatbot companies often anonymize data to protect privacy while still being able to use the information for improving services, analyzing customer interactions, or training their AI systems.

By anonymizing data, companies can still gain valuable insights without compromising user privacy. This is particularly important in situations where the chatbot collects large amounts of customer data for analysis, such as detecting common queries or issues.

Example: If a chatbot collects information about customers' preferences, it may strip away any personally identifying details like your name or contact number, so that the data can be used to improve the chatbot’s service without revealing your identity.
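A minimal sketch of the stripping step described above, assuming chat records are dictionaries with hypothetical field names: direct identifier fields are dropped, and email addresses and phone numbers left in free text are replaced with placeholders. The regular expressions are deliberately simple; they will miss some formats and may flag some non-phone numbers such as dates. Strictly speaking, this is de-identification: whether the result is truly anonymous depends on whether the remaining fields could still be combined to re-identify someone.

```python
import re
from typing import Dict

# Hypothetical field names that identify a person directly.
DIRECT_IDENTIFIERS = {"name", "email", "phone", "address"}

# Deliberately simple patterns; real PII detection needs broader coverage.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact_text(text: str) -> str:
    """Replace email addresses and phone-like numbers in free text with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)


def strip_identifiers(record: Dict[str, str]) -> Dict[str, str]:
    """Drop direct identifier fields and redact contact details left in the message text."""
    cleaned = {key: value for key, value in record.items() if key not in DIRECT_IDENTIFIERS}
    if "message" in cleaned:
        cleaned["message"] = redact_text(cleaned["message"])
    return cleaned
```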

5. Compliance with Data Privacy Laws

AI chatbot companies must also follow local and international data protection laws. Some of the most well-known laws include:

- GDPR (General Data Protection Regulation): A European Union law that regulates how companies handle the personal data of people in the EU. It requires companies to have a lawful basis, such as consent, for processing personal data, to let people access their data, and, in many cases, to delete it upon request.
- CCPA (California Consumer Privacy Act): A California law that gives consumers the right to know what data is being collected about them and to request its deletion.
- HIPAA (Health Insurance Portability and Accountability Act): In the US healthcare industry, AI chatbots that handle protected health information for healthcare providers, health plans, or their business associates must comply with HIPAA, which protects the privacy and security of health-related data.

By complying with these laws, AI chatbot companies demonstrate their commitment to protecting customer data and following best practices in privacy.

Example: If you’re in the EU, or the chatbot is run by a company based in the EU, the company generally must follow GDPR rules to protect your personal data.
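Laws like GDPR and CCPA give people the right to see and delete their data. The sketch below shows what handling those two requests might look like against a hypothetical in-memory `ConversationStore`; a real system would also need to verify the requester’s identity and clear copies held in backups, logs, and third-party processors.

```python
from collections import defaultdict
from typing import DefaultDict, Dict, List, Union


class ConversationStore:
    """Hypothetical in-memory store of chat messages keyed by user ID."""

    def __init__(self) -> None:
        self._messages: DefaultDict[str, List[str]] = defaultdict(list)

    def add(self, user_id: str, message: str) -> None:
        self._messages[user_id].append(message)

    def export_user_data(self, user_id: str) -> Dict[str, Union[str, List[str]]]:
        """Right of access: return a copy of everything held about the user."""
        # .get() does not create an empty entry for unknown users.
        return {"user_id": user_id, "messages": list(self._messages.get(user_id, []))}

    def delete_user_data(self, user_id: str) -> int:
        """Right to deletion: remove the user's data and report how many items were removed."""
        return len(self._messages.pop(user_id, []))
```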

6. Secure Data Storage

Once data is collected and processed by a chatbot, it needs to be securely stored. Companies use secure servers, firewalls, and other protection mechanisms to ensure that stored data cannot be accessed by unauthorized users.

They may also conduct regular security audits to identify potential vulnerabilities and ensure that their systems are up to date with the latest security protocols.

Example: The chatbot might store your data in an encrypted database that requires a password and other authentication methods to access it, ensuring that only authorized staff can retrieve it when necessary.
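The “password” part of that example is usually handled by storing only a salted, deliberately slow hash of each staff password rather than the password itself. This sketch uses the standard library’s `hashlib.pbkdf2_hmac` and compares hashes with `hmac.compare_digest` to avoid leaking information through comparison timing. The iteration count is an assumed work factor to tune to current guidance, and the sketch does not cover encrypting the database itself or the additional authentication methods mentioned above.

```python
import hashlib
import hmac
import secrets
from typing import Tuple

# Assumed work factor; tune it to current guidance and your hardware.
ITERATIONS = 600_000


def hash_password(password: str) -> Tuple[bytes, bytes]:
    """Return (salt, hash) for storage; the plain password is never stored."""
    salt = secrets.token_bytes(16)  # a fresh random salt per password
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest


def verify_password(password: str, salt: bytes, expected: bytes) -> bool:
    """Recompute the hash with the stored salt and compare in constant time."""
    candidate = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return hmac.compare_digest(candidate, expected)
```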

7. Transparent Data Practices

Reputable AI chatbot companies also aim to be transparent about how they use and store data. This is why businesses that use chatbots often provide a privacy policy or terms and conditions when you begin interacting with their chatbot.

These documents explain what data is collected, why it’s collected, how long it will be stored, and how you can request access or deletion of your personal data. Transparency helps build trust with customers and ensures they know their rights when using AI chatbots.

Example: Before using a chatbot on a retail website, you may be asked to review the privacy policy to understand what information the chatbot will collect (e.g., your order history or preferences).
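A retention period stated in a privacy policy only holds if the system enforces it. Here is a minimal sketch of a purge step, assuming each stored record carries a timezone-aware `created_at` timestamp and a hypothetical 30-day retention period.

```python
from datetime import datetime, timedelta, timezone
from typing import Dict, List, Optional

# Hypothetical retention period, as it might be stated in the privacy policy.
RETENTION = timedelta(days=30)


def purge_expired(records: List[Dict], now: Optional[datetime] = None) -> List[Dict]:
    """Return only the records younger than the retention period.

    Each record is assumed to have a timezone-aware "created_at" datetime.
    Records at or after the cutoff are kept; older ones are dropped.
    """
    if now is None:
        now = datetime.now(timezone.utc)
    cutoff = now - RETENTION
    return [record for record in records if record["created_at"] >= cutoff]
```

Running a job like this on a schedule keeps the data actually stored in line with what the policy tells users.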

8. Regular Updates and Security Patches

AI chatbot companies are always working to improve their systems and software. This includes updating their security protocols to protect customer data from emerging threats, such as newly discovered software vulnerabilities and attack techniques. Regular security patches help ensure that vulnerabilities are fixed promptly once they are identified.

By staying up to date with the latest security trends, companies can protect their chatbots—and, by extension, their customers—from potential breaches.

Example: If there is a new type of cyber threat, a company may release an update to its chatbot’s security features to ensure that customer data remains safe.
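A simple way to catch missed security updates is to compare installed component versions against the minimum versions known to include the latest fixes. The component names and version numbers below are hypothetical, and the parser handles only plain dotted numeric versions; for Python packages, the `packaging` library’s `Version` class handles pre-releases and other formats properly.

```python
from typing import Dict, List, Tuple

# Hypothetical minimum versions that contain the latest security fixes.
MINIMUM_PATCHED = {"chat-widget": "2.4.1", "nlp-engine": "1.9.0"}


def parse_version(version: str) -> Tuple[int, ...]:
    """Turn a plain dotted version like "2.4.1" into (2, 4, 1) for comparison."""
    return tuple(int(part) for part in version.split("."))


def outdated_components(installed: Dict[str, str]) -> List[str]:
    """List components below their minimum patched version (missing ones count as outdated)."""
    return sorted(
        name
        for name, minimum in MINIMUM_PATCHED.items()
        if parse_version(installed.get(name, "0")) < parse_version(minimum)
    )
```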

Conclusion

As AI chatbots become an essential part of customer service, businesses have a responsibility to protect the personal data of their users. By following data privacy best practices, such as data encryption, minimizing data collection, anonymizing data, and complying with privacy laws, AI chatbot companies can provide a secure and trustworthy service to customers.

For customers, it’s always important to be aware of the privacy policies of the chatbots you interact with, and to ensure that the company you’re dealing with follows the right measures to keep your data safe.

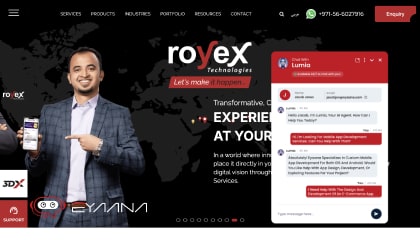

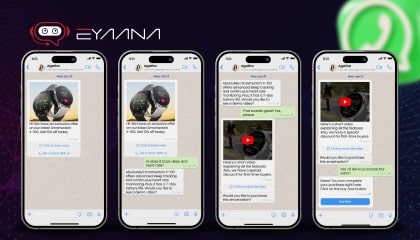

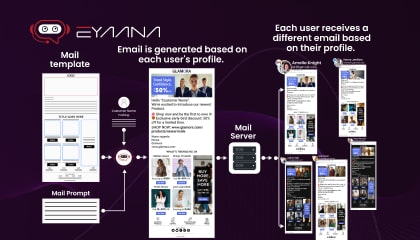

If you’re looking for a chatbot solution that prioritizes data privacy, Eyaana, an AI chatbot app, is an excellent choice. Eyaana follows the highest standards of data protection, ensuring that customer interactions are encrypted, personal data is minimized, and all necessary privacy regulations are met. With Eyaana, businesses can confidently offer AI-powered customer service without compromising their customers’ privacy.

To explore how AI can enhance your business operations, sign up for free and get started with Eyaana today.
